I could not stop reading this article, which I happened upon last night. Since reading it, I have not been able to shake my anxiety about the intelligence explosion scenario the author discusses and the consequences of an unsafe, unplanned and out-of-control (impending?) superintelligence. The sense of urgency in the text hits hard, especially its emphasis on securing model weights and algorithmic secrets before it is too late.

The author, researcher Leopold Aschenbrenner, seems very knowledgeable about AI and technological advancement. He was on the Superalignment team at OpenAI (he has since been fired; I am not sure whether that happened before or after this article, or why).

Several factors contribute to the sense of urgency in his article:

- Rapid pace of AI advancement: projections call for significant increases in AI capabilities over the coming decade, possibly leading to superintelligence. This rapid progression likely creates a sense of urgency about understanding and managing these developments.

- Geopolitical implications: a possible race (a war?) between nations for AI superiority, especially if one country gains a significant lead, could heighten the urgency to develop and deploy AI responsibly.

- Security risks: AI development carries the potential for malicious use and unintended consequences. This creates urgency to address those risks and to keep adversarial actors from exploiting AI advances.

- Ethical and governance challenges: these include aligning AI with human values, reducing the risk of AI systems going rogue, and establishing appropriate regulatory frameworks. They require proactive and thoughtful approaches, which adds to the urgency.

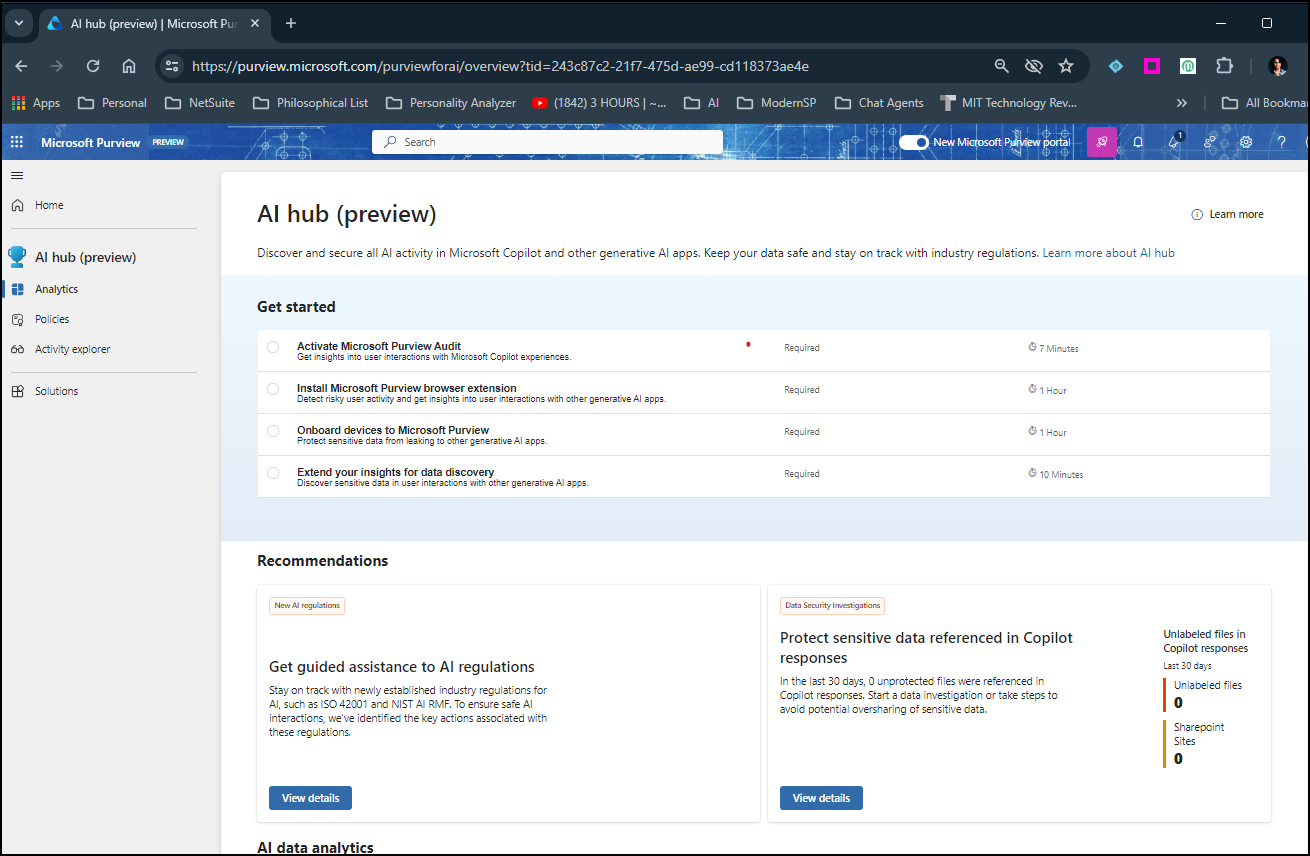

This article is very well written and, in my opinion, a MUST read for everyone. The images and graphs throughout drive home two needs: immediate situational awareness, and the security measures that must be put in place to govern AGI. The images below stood out for me:

RLHF – Reinforcement Learning from Human Feedback

- The pace of progress in AI is projected to accelerate rapidly. Estimates suggest that effective compute will increase by multiple orders of magnitude (OOMs) over the decade.

- Concerns about AI development include security, the potential for rogue behavior, and the geopolitical implications of AI advancement, particularly China's efforts in the field.

Interview with the author on YouTube:

Discover more from QubitSage Chronicles